By Dr. Harry Buhrman, Chief Scientist for Algorithms and Innovation, and Dr. Chris Langer, Fellow

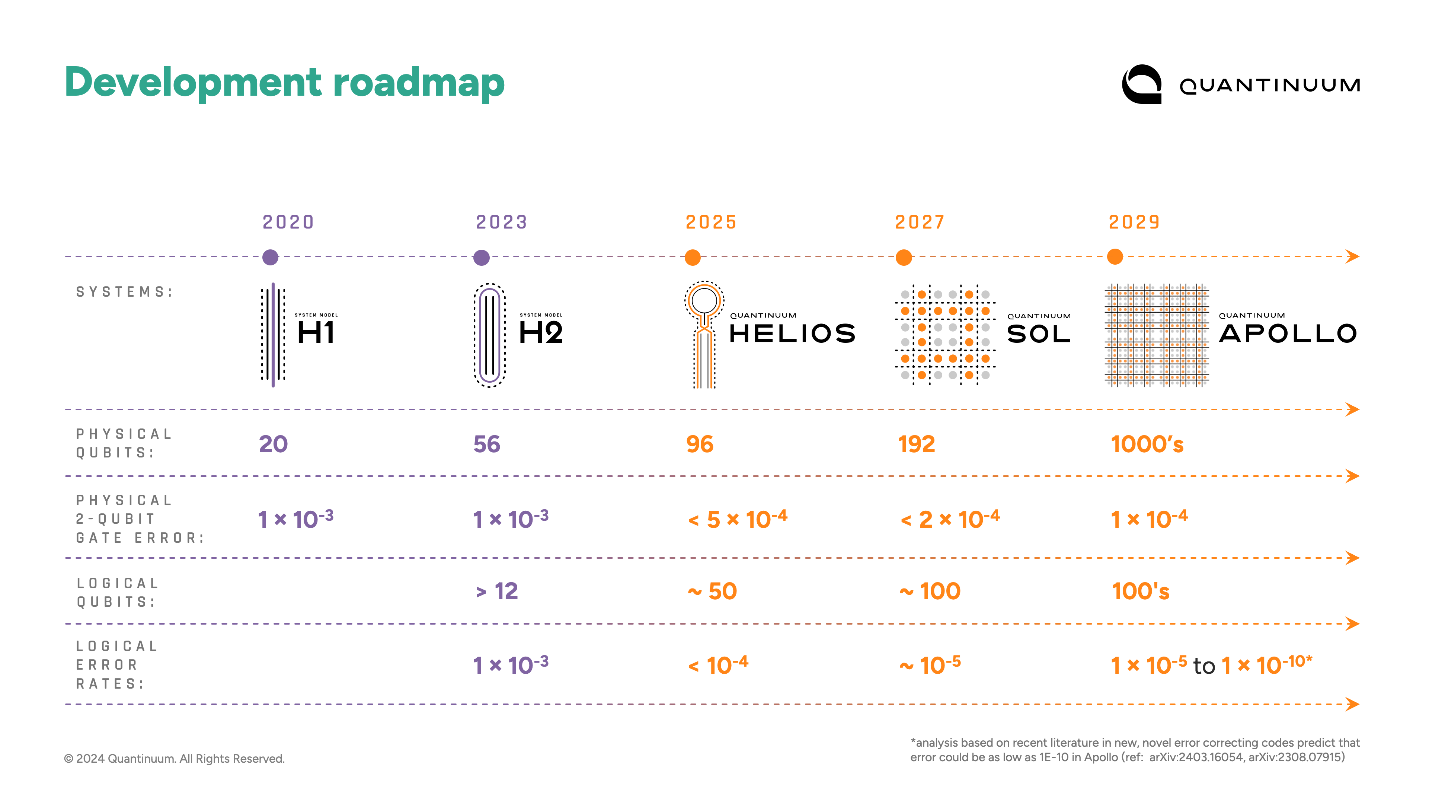

This week, we confirm what the rapid pace of our recent technical progress has implied: a major acceleration in our hardware roadmap. By the end of the decade, this roadmap will deliver a fully fault-tolerant, universal quantum computer capable of executing millions of operations on hundreds of logical qubits.

The next major milestone on our accelerated roadmap is Quantinuum Helios™, Powered by Honeywell, a device that will definitively push beyond classical capabilities in 2025. That sets us on a path to our fifth-generation system, Quantinuum Apollo™, a machine that delivers scientific advantage and a commercial tipping point this decade.

What is Apollo?

We are committed to continually advancing the capabilities of our hardware over prior generations, and Apollo makes good on that promise. It will offer:

- Thousands of physical qubits

- Physical error rates less than 10⁻⁴

- All of our most competitive features: all-to-all connectivity, low crosstalk, mid-circuit measurement and qubit re-use

- Conditional logic

- Real-time classical co-compute

- Physical variable-angle one-qubit and two-qubit gates

- Hundreds of logical qubits

- Logical error rates better than 10⁻⁶, with analysis based on recent literature estimating rates as low as 10⁻¹⁰

By leveraging our all-to-all connectivity and low error rates, we expect to enjoy significant efficiency gains in terms of fault-tolerance, including single-shot error correction (which saves time) and high-rate and high-distance Quantum Error Correction (QEC) codes (which mean more logical qubits, with stronger error correction capabilities, can be made from a smaller number of physical qubits).

Studies of several efficient QEC codes already suggest we can enjoy logical error rates much lower than our target 10⁻⁶ – we may even be able to reach 10⁻¹⁰, which enables exploration of even more complex problems of both industrial and scientific interest.

Error correcting code exploration is only just beginning – we anticipate discoveries of even more efficient codes. As new codes are developed, Apollo will be able to accommodate them, thanks to our flexible high-fidelity architecture. The bottom line is that Apollo promises fault-tolerant quantum advantage sooner, with fewer resources.

Like all our computers, Apollo is based on the quantum charge-coupled device (QCCD) architecture. Here, each qubit’s information is stored in the atomic states of a single ion. Laser beams are applied to the qubits to perform operations such as gates, initialization, and measurement. The lasers are applied to individual qubits or co-located qubit pairs in dedicated operation zones. Qubits are held in place using electromagnetic fields generated by our ion trap chip. We move the qubits around in space by dynamically changing the voltages applied to the chip. By alternating qubit rearrangement (via movement) with quantum operations, we can execute arbitrary circuits with arbitrary connectivity.
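The "rearrange, then operate" cycle described above can be illustrated with a toy scheduler. This is a minimal sketch under stated assumptions, not a description of our compiler or control system: the function name `schedule_rounds`, the `num_zones` parameter, and the greedy packing rule are all hypothetical, and the cost of physically moving ions is ignored entirely.

```python
from typing import Dict, List, Tuple

Gate = Tuple[int, int]  # a two-qubit gate acting on qubits (a, b)


def schedule_rounds(gates: List[Gate], num_zones: int) -> List[List[Gate]]:
    """Greedily pack two-qubit gates into rounds (toy model, hypothetical).

    Each round stands for one step of the cycle: qubit pairs are moved
    into operation zones, then their gates are applied in parallel.
    A round holds at most `num_zones` gates, no qubit appears twice in a
    round, and gates sharing a qubit keep their original order.
    """
    if num_zones < 1:
        raise ValueError("num_zones must be at least 1")
    rounds: List[List[Gate]] = []
    last_round: Dict[int, int] = {}  # qubit -> index of the last round using it
    for a, b in gates:
        if a == b:
            raise ValueError("a two-qubit gate needs two distinct qubits")
        # Earliest round that comes after both qubits' previous gates.
        r = max(last_round.get(a, -1), last_round.get(b, -1)) + 1
        # Skip rounds whose operation zones are already full.
        while r < len(rounds) and len(rounds[r]) >= num_zones:
            r += 1
        if r == len(rounds):
            rounds.append([])
        rounds[r].append((a, b))
        last_round[a] = last_round[b] = r
    return rounds


if __name__ == "__main__":
    circuit = [(0, 1), (2, 3), (1, 2), (0, 3)]
    for i, layer in enumerate(schedule_rounds(circuit, num_zones=2)):
        print(f"round {i}: {layer}")
```

Note that the scheduler never asks whether two qubits are neighbors. In this toy model, as in the QCCD picture, any pair can be brought together, so connectivity places no constraint on which gates may share a round.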

The ion trap chip in Apollo will host a 2D array of trapping locations. It will be fabricated using standard CMOS processing technology and controlled using standard CMOS electronics. The 2D grid architecture enables fast and scalable qubit rearrangement and quantum operations – a critical competitive advantage. The Apollo architecture is scalable to the significantly larger systems we plan to deliver in the next decade.

What is Apollo good for?

Apollo’s scaling of very stable physical qubits and native high-fidelity gates, together with our advanced error-correcting and fault-tolerant techniques, will establish a quantum computer that can perform tasks that do not run (efficiently) on any classical computer. We already had a first glimpse of this in our recent work sampling the output of random quantum circuits on H2, where we performed 100x better than competitors on the same task while using 30,000x less power than a classical supercomputer. But with Apollo we will travel into uncharted territory.

The flexibility to use either thousands of qubits for shorter computations (up to 10k gates) or hundreds of qubits for longer computations (from 1 million to 1 billion gates) makes Apollo a versatile machine with unprecedented quantum computational power. We expect the first application areas will be in scientific discovery, particularly the simulation of quantum systems. While this may sound academic, this is how all new material discovery begins and its value should not be understated. This era will lead to discoveries in materials science, high-temperature superconductivity, complex magnetic systems, phase transitions, and high energy physics, among other things.
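A rough way to see how error rates translate into the gate counts quoted above is a back-of-the-envelope model in which every gate fails independently with the same probability p, so a circuit of N gates runs error-free with probability (1 − p)^N. The sketch below is only that heuristic. The independence assumption, the function names, and the 1/e success threshold are illustrative choices, not specifications of Apollo.

```python
import math


def success_probability(error_rate: float, num_gates: int) -> float:
    """Probability that all gates succeed, assuming independent, equal errors."""
    return (1.0 - error_rate) ** num_gates


def gate_budget(error_rate: float, target_success: float = 1 / math.e) -> int:
    """Largest gate count N with (1 - p)^N >= target_success.

    The default threshold of 1/e is an arbitrary illustrative choice; it
    makes the budget come out close to 1 / error_rate.
    """
    if not 0.0 < error_rate < 1.0:
        raise ValueError("error_rate must be strictly between 0 and 1")
    if not 0.0 < target_success < 1.0:
        raise ValueError("target_success must be strictly between 0 and 1")
    return math.floor(math.log(target_success) / math.log1p(-error_rate))


if __name__ == "__main__":
    for label, p in [("physical, 1e-4", 1e-4),
                     ("logical, 1e-6", 1e-6),
                     ("logical, 1e-10", 1e-10)]:
        print(f"{label}: roughly {gate_budget(p):.2e} gates")
    # A billion-gate circuit at a logical error rate of 1e-10:
    print(f"{success_probability(1e-10, 10**9):.3f}")
```

Under this simple model, an error rate of 10⁻⁴ supports on the order of 10,000 gates and 10⁻⁶ supports on the order of a million, while at 10⁻¹⁰ a billion-gate circuit would still finish without error about nine times out of ten.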

In general, Apollo will advance the field of physics to new heights while we start to see the first glimmers of distinct progress in chemistry and biology. For some of these applications, users will employ Apollo in a mode where it offers thousands of qubits for relatively short computations; e.g. exploring the magnetism of materials. At other times, users may want to employ significantly longer computations for applications like chemistry or topological data analysis.

But there is more on the horizon. Carefully crafted AI models that interact seamlessly with Apollo will be able to squeeze all the “quantum juice” out and generate data that was hitherto unavailable to mankind. We anticipate using this data to further the field of AI itself, as it can be used as training data.

The era of scientific (quantum) discovery and exploration will inevitably lead to commercial value. Apollo will be the centerpiece of this commercial tipping point where use-cases will build on the value of scientific discovery and support highly innovative commercially viable products.

Very interestingly, we will uncover applications that we are currently unaware of. As is always the case with disruptive new technology, Apollo will run currently unknown use-cases and applications that will make perfect sense once we see them. We are eager to co-develop these with our customers in our unique co-creation program.

How do we get there?

Today, System Model H2 is our most advanced commercial quantum computer, providing 56 physical qubits with physical two-qubit gate errors less than 10⁻³. System Model H2, like all our systems, is based on the QCCD architecture.

Starting from where we are today, our roadmap progresses through two additional machines prior to Apollo. The Quantinuum Helios™ system, which we are releasing in 2025, will offer around 100 physical qubits with two-qubit gate errors less than 5×10⁻⁴. In addition to expanded qubit count and better errors, Helios makes two departures from H2. First, Helios will use ¹³⁷Ba⁺ qubits in contrast to the ¹⁷¹Yb⁺ qubits used in our H1 and H2 systems. This change enables lower two-qubit gate errors and less complex laser systems with lower cost. Second, for the first time in a commercial system, Helios will use junction-based qubit routing. The result will be a “twice-as-good” system: Helios will offer roughly 2x more qubits with 2x lower two-qubit gate errors while operating more than 2x faster than our 56-qubit H2 system.

After Helios we will introduce Quantinuum Sol™, our first commercially available 2D-grid-based quantum computer. Sol will offer hundreds of physical qubits with two-qubit gate errors less than 2×10⁻⁴, operating approximately 2x faster than Helios. As a fully 2D-grid architecture, Sol is the scalability launching point for the significant size increase planned for Apollo.

Opportunity for early value creation and discovery in Helios and Sol

Thanks to Sol’s low error rates, users will be able to execute circuits with up to 10,000 quantum operations. The usefulness of Helios and Sol may be extended with a combination of quantum error detection (QED) and quantum error mitigation (QEM). For example, the [[k+2, k, 2]] iceberg code is a lightweight QED code that encodes k logical qubits into k+2 physical qubits and uses only 2 additional ancilla qubits. This low-overhead code is well-suited for Helios and Sol because it offers the non-Clifford variable-angle entangling ZZ-gate directly, without the overhead of magic state distillation. The errors the iceberg code fails to detect are already ~10x lower than our physical errors, and by applying a modest run-time overhead to discard detected failures, the effective error in the computation can be further reduced. Combining QED with QEM, a ~10x reduction in the effective error may be possible while maintaining run-time overhead at modest levels and below that of full-blown QEC.
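To make the [[k+2, k, 2]] parameters concrete, the sketch below counts qubits and checks which Pauli errors are flagged. It assumes the usual construction of such a distance-2 code, with two stabilizers given by X on every code qubit and Z on every code qubit (which requires an even number of code qubits). The function names are hypothetical, and the model covers only errors on the code qubits themselves, not faults inside the syndrome-measurement circuit.

```python
from typing import Dict, Set, Tuple


def iceberg_layout(k: int) -> Dict[str, float]:
    """Qubit bookkeeping for a [[k+2, k, 2]] code plus 2 ancillas."""
    if k < 2 or k % 2:
        raise ValueError("k must be a positive even integer")
    n = k + 2  # code qubits
    return {
        "logical": k,
        "code_qubits": n,
        "ancillas": 2,
        "total_physical": n + 2,
        "rate": k / n,  # logical qubits per code qubit
    }


def syndrome(n: int, x_support: Set[int], z_support: Set[int]) -> Tuple[int, int]:
    """Syndrome of a Pauli error under the all-Z and all-X stabilizers.

    The error has an X component on `x_support` and a Z component on
    `z_support` (a qubit in both sets carries a Y error). Each bit is 1
    when the error anticommutes with the corresponding stabilizer.
    """
    for q in x_support | z_support:
        if not 0 <= q < n:
            raise ValueError(f"qubit {q} is outside the {n}-qubit code block")
    flips_all_z = len(x_support) % 2  # X components anticommute with Z...Z
    flips_all_x = len(z_support) % 2  # Z components anticommute with X...X
    return (flips_all_z, flips_all_x)


def is_detected(n: int, x_support: Set[int], z_support: Set[int]) -> bool:
    """True if at least one stabilizer flags the error."""
    return any(syndrome(n, x_support, z_support))


if __name__ == "__main__":
    layout = iceberg_layout(4)
    n = int(layout["code_qubits"])
    print(layout)
    print("single X on qubit 0 detected:", is_detected(n, {0}, set()))
    print("X on qubits 0 and 1 detected:", is_detected(n, {0, 1}, set()))
```

Every single-qubit X, Y, or Z error flips at least one of the two checks, so a run containing one can be discarded. A pair of like errors passes both checks unnoticed, which is what distance 2 means: the code detects errors but cannot correct them.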

Why accelerate our roadmap now?

Our new roadmap is an acceleration over what we were previously planning. The benefits of this are obvious: Apollo brings the commercial tipping point sooner than we previously thought possible. This acceleration is made possible by a set of recent breakthroughs.

First, we solved the “wiring problem”: we demonstrated that trap chip control is scalable using our novel center-to-left-right (C2LR) protocol and broadcasting shared control signals to multiple electrodes. This demonstration of qubit rearrangement in a 2D geometry marks the most advanced ion trap built, containing approximately 40 junctions. This trap was deployed to 3 different testbeds in 2 different cities and operated with 2 different collections of dual-ion-species, and all 3 cases were a success. These demonstrations showed that the footprint of the most complex parts of the trap control stays constant as the number of qubits scales up. This gives us the confidence that Sol, with approximately 100 junctions, will be a success.

Second, we continue to reduce our two-qubit physical gate errors. Today, H1 and H2 have two-qubit gate errors less than 1×10⁻³ across all pairs of qubits. This is the best in the industry and is a key ingredient in our record >2 million quantum volume. Our systems are the most benchmarked in the industry, and we stand by our data, making all of it publicly available. Recently, we observed an 8×10⁻⁴ two-qubit gate error in our Helios development test stand in ¹³⁷Ba⁺, and we’ve seen even better error rates in other testbeds. We are well on the path to meeting the 5×10⁻⁴ spec in Helios next year.

Third, the all-to-all connectivity offered by our systems enables highly efficient QEC codes. In Microsoft’s recent demonstration, our H2 system with 56 physical qubits was used to generate 12 logical qubits at distance 4. This work demonstrated several experiments, including repeated rounds of error correction where the error in the final result was ~10x lower than the physical circuit baseline.

In conclusion, through a combination of advances in hardware readiness and QEC, we have line-of-sight to Apollo, a fully fault-tolerant, quantum-advantaged machine, by the end of the decade. This will be a commercial tipping point, ushering in an era of scientific discovery in physics, materials, chemistry, and more. Along the way, users will have the opportunity to discover new enabling use cases through quantum error detection and mitigation in Helios and Sol.

Quantinuum has the best quantum computers today and is on the path to offering useful, fault-tolerant quantum computation by the end of the decade.