Technical perspective: By the end of the decade, we will deliver universal, fully fault-tolerant quantum computing

By Dr. Harry Buhrman, Chief Scientist for Algorithms and Innovation, and Dr. Chris Langer, Fellow

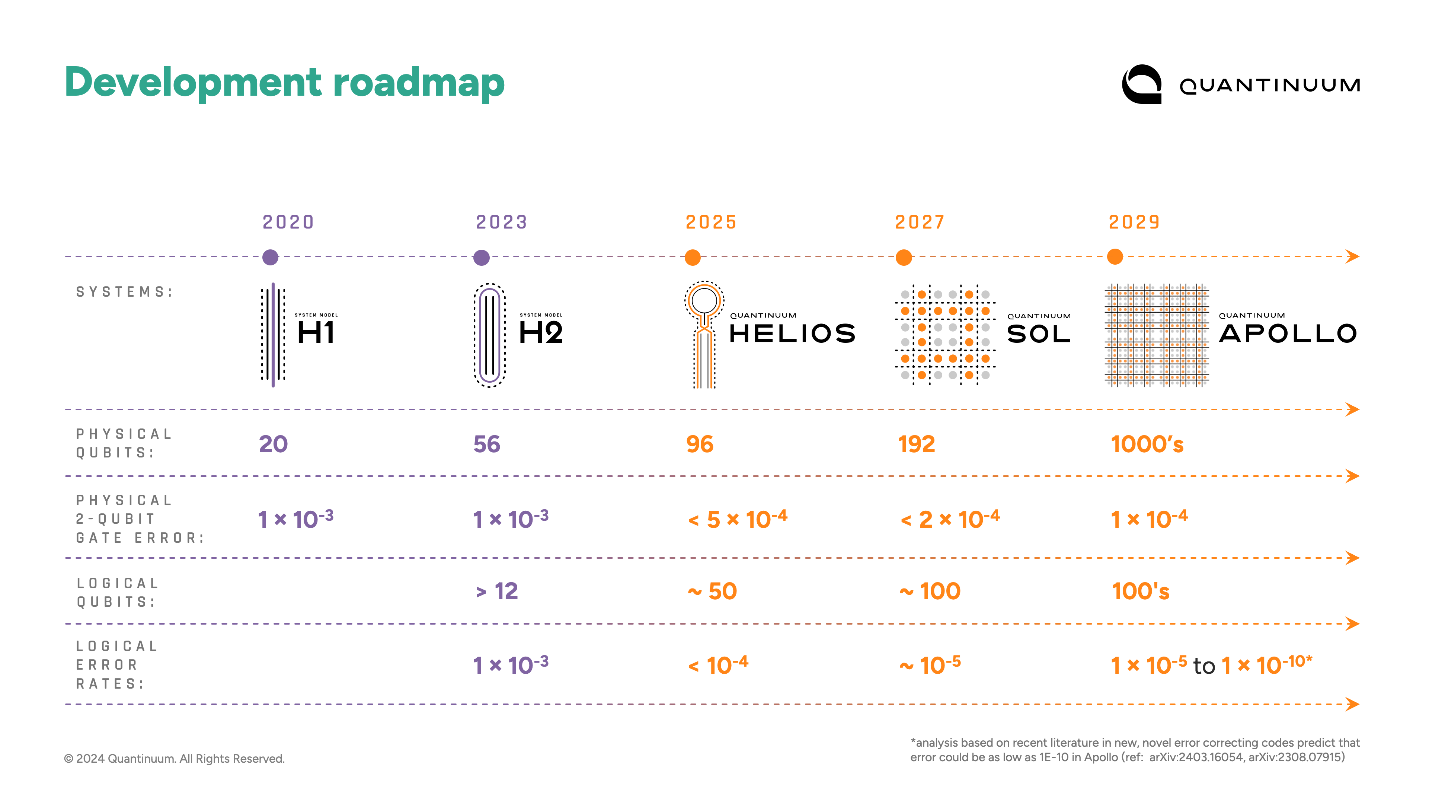

This week, we confirm what the rapid pace of our recent technical progress has implied, as we reveal a major acceleration in our hardware roadmap. By the end of the decade, this accelerated roadmap will deliver a fully fault-tolerant and universal quantum computer capable of executing millions of operations on hundreds of logical qubits.

The next major milestone on our accelerated roadmap is Quantinuum Helios™, Powered by Honeywell, a device that will definitively push beyond classical capabilities in 2025. That sets us on a path to our fifth-generation system, Quantinuum Apollo™, a machine that delivers scientific advantage and a commercial tipping point this decade.

What is Apollo?

We are committed to continually advancing the capabilities of our hardware over prior generations, and Apollo makes good on that promise. It will offer:

- Thousands of physical qubits

- Physical error rates less than 10⁻⁴

- All of our most competitive features: all-to-all connectivity, low crosstalk, mid-circuit measurement and qubit re-use

- Conditional logic

- Real-time classical co-compute

- Physical variable-angle one-qubit and two-qubit gates

- Hundreds of logical qubits

- Logical error rates better than 10⁻⁶, with analysis based on recent literature estimating as low as 10⁻¹⁰

By leveraging our all-to-all connectivity and low error rates, we expect to enjoy significant efficiency gains in terms of fault-tolerance, including single-shot error correction (which saves time) and high-rate and high-distance Quantum Error Correction (QEC) codes (which mean more logical qubits, with stronger error correction capabilities, can be made from a smaller number of physical qubits).

Studies of several efficient QEC codes already suggest we can enjoy logical error rates much lower than our target 10⁻⁶ – we may even be able to reach 10⁻¹⁰, which enables exploration of even more complex problems of both industrial and scientific interest.

Error correcting code exploration is only just beginning – we anticipate discoveries of even more efficient codes. As new codes are developed, Apollo will be able to accommodate them, thanks to our flexible high-fidelity architecture. The bottom line is that Apollo promises fault-tolerant quantum advantage sooner, with fewer resources.

Like all our computers, Apollo is based on the quantum charge-coupled device (QCCD) architecture. Here, each qubit’s information is stored in the atomic states of a single ion. Laser beams are applied to the qubits to perform operations such as gates, initialization, and measurement. The lasers are applied to individual qubits or co-located qubit pairs in dedicated operation zones. Qubits are held in place using electromagnetic fields generated by our ion trap chip. We move the qubits around in space by dynamically changing the voltages applied to the chip. Through an alternating sequence of qubit rearrangements via movement followed by quantum operations, arbitrary circuits with arbitrary connectivity can be executed.
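The alternating "rearrange, then operate" pattern can be illustrated with a toy scheduler. This is a minimal sketch, not our control software: the function name `schedule`, the `num_zones` parameter, and the greedy packing rule are all assumptions made for illustration. It groups two-qubit gates into rounds, where each round stands for one rearrangement step followed by gates applied in parallel in the available operation zones.

```python
from typing import List, Tuple

Gate = Tuple[int, int]  # a two-qubit gate acting on qubits (a, b)

def schedule(gates: List[Gate], num_zones: int) -> List[List[Gate]]:
    """Greedily pack gates into rounds (hypothetical toy model).

    Each round represents one qubit rearrangement followed by up to
    `num_zones` gates executed in parallel. The order of gates acting
    on any given qubit is preserved.
    """
    if num_zones < 1:
        raise ValueError("need at least one operation zone")
    rounds: List[List[Gate]] = []
    remaining = list(gates)
    while remaining:
        busy = set()          # qubits already used or blocked this round
        this_round: List[Gate] = []
        deferred: List[Gate] = []
        for a, b in remaining:
            if a in busy or b in busy or len(this_round) >= num_zones:
                deferred.append((a, b))
            else:
                this_round.append((a, b))
            # Either way, later gates on these qubits must wait their turn.
            busy.update((a, b))
        rounds.append(this_round)
        remaining = deferred
    return rounds

circuit = [(0, 1), (2, 3), (1, 2), (0, 3)]
two_zone_rounds = schedule(circuit, num_zones=2)
one_zone_rounds = schedule(circuit, num_zones=1)
```

Because any pair of ions can be brought together in a zone, the gate list may pair arbitrary qubits; in this toy model, adding zones only changes how many rounds are needed, not which circuits can be run.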

The ion trap chip in Apollo will host a 2D array of trapping locations. It will be fabricated using standard CMOS processing technology and controlled using standard CMOS electronics. The 2D grid architecture enables fast and scalable qubit rearrangement and quantum operations – a critical competitive advantage. The Apollo architecture is scalable to the significantly larger systems we plan to deliver in the next decade.

What is Apollo good for?

Apollo’s scaling of very stable physical qubits and native high-fidelity gates, together with our advanced error-correcting and fault-tolerant techniques, will establish a quantum computer that can perform tasks that do not run (efficiently) on any classical computer. We already had a first glimpse of this in our recent work sampling the output of random quantum circuits on H2, where we performed 100x better than competitors running the same task, while using 30,000x less power than a classical supercomputer. But with Apollo we will travel into uncharted territory.

The flexibility to use either thousands of qubits for shorter computations (up to 10k gates) or hundreds of qubits for longer computations (from 1 million to 1 billion gates) makes Apollo a versatile machine with unprecedented quantum computational power. We expect the first application areas will be in scientific discovery, particularly the simulation of quantum systems. While this may sound academic, this is how all new material discovery begins and its value should not be understated. This era will lead to discoveries in materials science, high-temperature superconductivity, complex magnetic systems, phase transitions, and high energy physics, among other things.
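A back-of-the-envelope calculation shows why these gate counts line up with the error rates quoted earlier. The sketch below assumes a deliberately crude model, not our internal analysis: every gate fails independently with the same probability, and a single failure spoils the run. The function names are hypothetical.

```python
import math

def success_probability(error_per_gate: float, num_gates: int) -> float:
    """Chance that no gate fails, assuming independent, identical errors."""
    return (1.0 - error_per_gate) ** num_gates

def max_gates(error_per_gate: float, target_success: float = 1 / math.e) -> int:
    """Largest gate count that keeps the success chance at or above the target."""
    return math.floor(math.log(target_success) / math.log1p(-error_per_gate))

# Physical mode: about 10k gates at a 1e-4 physical error rate.
physical_mode = success_probability(1e-4, 10_000)

# Logical mode: about 1 million gates at a 1e-6 logical error rate.
logical_mode = success_probability(1e-6, 1_000_000)

# Optimistic logical mode: about 1 billion gates at a 1e-10 logical error rate.
optimistic_mode = success_probability(1e-10, 1_000_000_000)
```

Under this model the first two cases both come out near 1/e (roughly 37%), and the third near 90%. The useful circuit size scales roughly as the inverse of the error rate, which is why lower logical error rates open up longer computations.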

In general, Apollo will advance the field of physics to new heights while we start to see the first glimmers of distinct progress in chemistry and biology. For some of these applications, users will employ Apollo in a mode where it offers thousands of qubits for relatively short computations; e.g. exploring the magnetism of materials. At other times, users may want to employ significantly longer computations for applications like chemistry or topological data analysis.

But there is more on the horizon. Carefully crafted AI models that interact seamlessly with Apollo will be able to squeeze all the “quantum juice” out and generate data that was hitherto unavailable to mankind. We anticipate using this data to further the field of AI itself, as it can be used as training data.

The era of scientific (quantum) discovery and exploration will inevitably lead to commercial value. Apollo will be the centerpiece of this commercial tipping point where use-cases will build on the value of scientific discovery and support highly innovative commercially viable products.

Very interestingly, we will uncover applications that we are currently unaware of. As is always the case with disruptive new technology, Apollo will run currently unknown use-cases and applications that will make perfect sense once we see them. We are eager to co-develop these with our customers in our unique co-creation program.

How do we get there?

Today, System Model H2 is our most advanced commercial quantum computer, providing 56 physical qubits with physical two-qubit gate errors less than 10⁻³. System Model H2, like all our systems, is based on the QCCD architecture.

Starting from where we are today, our roadmap progresses through two additional machines prior to Apollo. The Quantinuum Helios™ system, which we are releasing in 2025, will offer around 100 physical qubits with two-qubit gate errors less than 5×10⁻⁴. In addition to expanded qubit count and better errors, Helios makes two departures from H2. First, Helios will use ¹³⁷Ba⁺ qubits in contrast to the ¹⁷¹Yb⁺ qubits used in our H1 and H2 systems. This change enables lower two-qubit gate errors and less complex laser systems with lower cost. Second, for the first time in a commercial system, Helios will use junction-based qubit routing. The result will be a “twice-as-good” system: Helios will offer roughly 2x more qubits with 2x lower two-qubit gate errors while operating more than 2x faster than our 56-qubit H2 system.

After Helios we will introduce Quantinuum Sol™, our first commercially available 2D-grid-based quantum computer. Sol will offer hundreds of physical qubits with two-qubit gate errors less than 2×10⁻⁴, operating approximately 2x faster than Helios. As a fully 2D-grid architecture, Sol is the scalability launching point for the significant size increase planned for Apollo.

Opportunity for early value creation and discovery with Helios and Sol

Thanks to Sol’s low error rates, users will be able to execute circuits with up to 10,000 quantum operations. The usefulness of Helios and Sol may be extended with a combination of quantum error detection (QED) and quantum error mitigation (QEM). For example, the [[k+2, k, 2]] Iceberg code is a lightweight QED code that encodes k logical qubits into k+2 physical qubits and uses only 2 additional ancilla qubits. This low-overhead code is well-suited for Helios and Sol because it offers the non-Clifford variable-angle entangling ZZ-gate directly, without the overhead of magic state distillation. The errors Iceberg fails to detect are already ~10x lower than our physical errors, and by applying a modest run-time overhead to discard detected failures, the effective error in the computation can be further reduced. Combining QED with QEM, a ~10x reduction in the effective error may be possible while maintaining run-time overhead at modest levels and below that of full-blown QEC.
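To make the "distance 2" in [[k+2, k, 2]] concrete, here is a minimal sketch. It assumes the Iceberg code's two stabilizers are the all-X and all-Z operators on the k+2 physical qubits, which is how the code is usually presented in the literature but is not spelled out in this post. The helper names are hypothetical. The sketch checks that every single-qubit Pauli error trips at least one stabilizer, while some two-qubit errors go unnoticed.

```python
from itertools import combinations, product
from typing import Dict, List

PauliError = Dict[int, str]  # maps qubit index -> 'X', 'Y' or 'Z'

def flips_all_x_check(error: PauliError) -> bool:
    """The all-X stabilizer anticommutes with an odd number of Y/Z factors."""
    return sum(p in "YZ" for p in error.values()) % 2 == 1

def flips_all_z_check(error: PauliError) -> bool:
    """The all-Z stabilizer anticommutes with an odd number of X/Y factors."""
    return sum(p in "XY" for p in error.values()) % 2 == 1

def is_detected(error: PauliError) -> bool:
    return flips_all_x_check(error) or flips_all_z_check(error)

def single_qubit_errors(n: int) -> List[PauliError]:
    return [{q: p} for q in range(n) for p in "XYZ"]

def two_qubit_errors(n: int) -> List[PauliError]:
    return [
        {a: pa, b: pb}
        for a, b in combinations(range(n), 2)
        for pa, pb in product("XYZ", repeat=2)
    ]

k = 4          # logical qubits (assumed even for this code family)
n = k + 2      # physical data qubits

all_single_detected = all(is_detected(e) for e in single_qubit_errors(n))
undetected_pairs = [e for e in two_qubit_errors(n) if not is_detected(e)]
encoding_rate = k / n
```

In this model the undetected weight-2 errors are exactly the XX, YY and ZZ pairs. Detection therefore removes the dominant single-fault errors, and the residual error comes from rarer two-fault events, in line with the lower undetected error rate described above.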

Why accelerate our roadmap now?

Our new roadmap is an acceleration over what we were previously planning. The benefits of this are obvious: Apollo brings the commercial tipping point sooner than we previously thought possible. This acceleration is made possible by a set of recent breakthroughs.

First, we solved the “wiring problem”: we demonstrated that trap chip control is scalable using our novel center-to-left-right (C2LR) protocol and broadcasting shared control signals to multiple electrodes. This demonstration of qubit rearrangement in a 2D geometry marks the most advanced ion trap built, containing approximately 40 junctions. This trap was deployed to 3 different testbeds in 2 different cities and operated with 2 different collections of dual-ion-species, and all 3 cases were a success. These demonstrations showed that the footprint of the most complex parts of the trap control stay constant as the number of qubits scales up. This gives us the confidence that Sol, with approximately 100 junctions, will be a success.

Second, we continue to reduce our two-qubit physical gate errors. Today, H1 and H2 have two-qubit gate errors less than 1×10⁻³ across all pairs of qubits. This is the best in the industry and is a key ingredient in our record >2 million quantum volume. Our systems are the most benchmarked in the industry, and we stand by our data, making it all publicly available. Recently, we observed an 8×10⁻⁴ two-qubit gate error in our Helios development test stand in ¹³⁷Ba⁺, and we’ve seen even better error rates in other testbeds. We are well on the path to meeting the 5×10⁻⁴ spec in Helios next year.

Third, the all-to-all connectivity offered by our systems enables highly efficient QEC codes. In Microsoft’s recent demonstration, our H2 system with 56 physical qubits was used to generate 12 logical qubits at distance 4. This work demonstrated several experiments, including repeated rounds of error correction where the error in the final result was ~10x lower than the physical circuit baseline.

In conclusion, through a combination of advances in hardware readiness and QEC, we have line-of-sight to Apollo, a fully fault-tolerant machine capable of quantum advantage, by the end of the decade. This will be a commercial tipping point, ushering in an era of scientific discovery in physics, materials, chemistry, and more. Along the way, users will have the opportunity to discover new enabling use cases through quantum error detection and mitigation in Helios and Sol.

Quantinuum has the best quantum computers today and is on the path to offering fault-tolerant useful quantum computation by the end of the decade.

About Quantinuum

Quantinuum, the world’s largest integrated quantum company, pioneers powerful quantum computers and advanced software solutions. Quantinuum’s technology drives breakthroughs in materials discovery, cybersecurity, and next-gen quantum AI. With over 500 employees, including 370+ scientists and engineers, Quantinuum leads the quantum computing revolution across continents.

Typically, Quantum Error Detection (QED) is viewed as a short-term solution—a non-scalable, stop-gap until full fault tolerance is achieved at scale.

That’s just changed, thanks to a serendipitous discovery made by our team. Now, QED can be used in a much wider context than previously thought. Our team made this discovery while studying the contact process, which describes things like how diseases spread or how water permeates porous materials. In particular, our team was studying the quantum contact process (QCP), a problem they had tackled before, which helps physicists understand things like phase transitions. In the process (pun intended), they came across what senior advanced physicist, Eli Chertkov, described as “a surprising result.”

While examining the problem, the team realized that they could convert detected errors due to noisy hardware into random resets, a key part of the QCP, thus avoiding the exponentially costly overhead of post-selection normally expected in QED.

To understand this better, the team developed a new protocol in which the encoded, or logical, quantum circuit adapts to the noise generated by the quantum computer. They quickly realized that this method could be used to explore other classes of random circuits similar to the ones they were already studying.

The team put it all together on System Model H2 to run a complex simulation, and were surprised to find that they were able to achieve near break-even results, where the logically encoded circuit performed as well as its physical analog, thanks to their clever application of QED. Ultimately, this new protocol will allow QED codes to be used in a scalable way, saving considerable computational resources compared to full quantum error correction (QEC).

Researchers at the crossroads of quantum information, quantum simulation, and many-body physics will take interest in this protocol and use it as a springboard for inventing new use cases for QED.

Stay tuned for more: our team always has new tricks up their sleeves.

Learn more about System Model H2 with this video:

By Konstantinos Meichanetzidis

When will quantum computers outperform classical ones?

This question has hovered over the field for decades, shaping billion-dollar investments and driving scientific debate.

The question has more meaning in context, as the answer depends on the problem at hand. We already have estimates of the quantum computing resources needed for Shor’s algorithm, which has a superpolynomial advantage for integer factoring over the best-known classical methods, threatening cryptographic protocols. Quantum simulation allows one to glean insights into exotic materials and chemical processes that classical machines struggle to capture, especially when strong correlations are present. But even within these examples, estimates change surprisingly often, carving years off expected timelines. And outside these famous cases, the map to quantum advantage is surprisingly hazy.

Researchers at Quantinuum have taken a fresh step toward drawing this map. In a new theoretical framework, Harry Buhrman, Niklas Galke, and Konstantinos Meichanetzidis introduce the concept of “queasy instances” (quantum easy) – problem instances that are comparatively easy for quantum computers but appear difficult for classical ones.

From Problem Classes to Problem Instances

Traditionally, computer scientists classify problems according to their worst-case difficulty. Consider the problem of Boolean satisfiability, or SAT, where one is given a set of variables (each can be assigned a 0 or a 1) and a set of constraints and must decide whether there exists a variable assignment that satisfies all the constraints. SAT is a canonical NP-complete problem, and so in the worst case, both classical and quantum algorithms are expected to perform badly, which means that the runtime scales exponentially with the number of variables. On the other hand, factoring is believed to be easier for quantum computers than for classical ones. But real-world computing doesn’t deal only in worst cases. Some instances of SAT are trivial; others are nightmares. The same is true for optimization problems in finance, chemistry, or logistics. What if quantum computers have an advantage not across all instances, but only for specific “pockets” of hard instances? This could be very valuable, but worst-case analysis is oblivious to this and declares that there is no quantum advantage.
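For readers unfamiliar with SAT, the following sketch shows the problem in its rawest form. It is a naive brute-force checker written for illustration only (real SAT solvers are far more sophisticated), and the function name and clause encoding are our own choices. The loop over all 2^n assignments is exactly the exponential scaling that worst-case analysis worries about.

```python
from itertools import product
from typing import List, Optional, Tuple

Clause = List[int]  # DIMACS-style literals: 3 means x3, -3 means NOT x3

def brute_force_sat(num_vars: int, clauses: List[Clause]) -> Optional[Tuple[bool, ...]]:
    """Return a satisfying assignment, or None if the formula is unsatisfiable.

    Tries all 2**num_vars assignments, so the worst-case runtime is exponential.
    """
    for assignment in product([False, True], repeat=num_vars):
        if all(
            any(assignment[abs(lit) - 1] == (lit > 0) for lit in clause)
            for clause in clauses
        ):
            return assignment
    return None

# (x1 OR x2) AND (NOT x1 OR x2) AND (NOT x2 OR x3)
easy_formula = [[1, 2], [-1, 2], [-2, 3]]
solution = brute_force_sat(3, easy_formula)

# x1 AND NOT x1 can never be satisfied.
contradiction = brute_force_sat(1, [[1], [-1]])
```

Both formulas here are trivial instances: a solver that spots the structure answers immediately without enumerating anything. That gap between the worst case and the individual instance is what the rest of this post is about.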

To make that idea precise, the researchers turned to a tool from theoretical computer science: Kolmogorov complexity. This is a way of measuring how “regular” a string of bits is, based on the length of the shortest program that generates it. A simple string like 0000000000 can be described by a tiny program (“print ten zeros”), while the shortest program that generates a random, patternless string is about as long as the string itself. From there, the notion of instance complexity was developed: instead of asking “how hard is it to describe this string?”, we ask “how hard is it to solve this particular problem instance (represented by a string)?” For a given SAT formula, for example, its polynomial-time instance complexity is the size of the smallest program that runs in polynomial time and decides whether the formula is satisfiable. Any such program must also answer consistently on every other instance: it may never give a wrong answer, but it is allowed to declare “I don’t know”.
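Kolmogorov complexity itself cannot be computed, but an off-the-shelf compressor gives a rough, computable upper bound that captures the intuition. The sketch below uses Python's standard `zlib` module as that stand-in; it is an illustration of the "regular versus random" contrast, not a tool used in the paper.

```python
import os
import zlib

def compressed_length(data: bytes) -> int:
    """Length in bytes after zlib compression: a crude upper-bound proxy
    for description length, not the true Kolmogorov complexity."""
    return len(zlib.compress(data, 9))

size = 10_000
regular_string = b"0" * size          # highly regular: "print 10,000 zeros"
random_string = os.urandom(size)      # no pattern for the compressor to exploit

regular_cost = compressed_length(regular_string)
random_cost = compressed_length(random_string)
```

The regular string shrinks to a few dozen bytes, while the random one stays at roughly its original size.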

In their new work, the team extends this idea into the quantum realm by defining polynomial-time quantum instance complexity as the size of the shortest quantum program that solves a given instance and runs in polynomial time. This makes it possible to directly compare quantum and classical effort, in terms of program description length, on the very same problem instance. If the quantum description is significantly shorter than the classical one, that problem instance is one the researchers call “queasy”: quantum-easy and classically hard. These queasy instances are the precise places where quantum computers offer a provable advantage – and one that may be overlooked under a worst-case analysis.
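For readers who prefer symbols, the two quantities being compared can be written schematically as follows. The classical line follows the standard textbook definition of instance complexity. The quantum line and the gap Δ are a shorthand for the idea described above, not the paper's exact definitions, which must also pin down details such as how a quantum program's error probability is handled. Here A is the decision problem, x the instance, |p| the length of program p, and ⊥ stands for “I don’t know”.

```latex
\begin{align*}
\mathrm{ic}^{\mathrm{poly}}(x : A) &= \min\bigl\{\, |p| \;:\; p \text{ is a classical program running in polynomial time},\\
&\qquad\qquad p(x) = A(x), \ \text{and } p(y) \in \{A(y), \bot\} \text{ for all } y \,\bigr\} \\[4pt]
\mathrm{qic}^{\mathrm{poly}}(x : A) &= \text{the same minimum, taken over quantum programs} \\[4pt]
\Delta(x) &= \mathrm{ic}^{\mathrm{poly}}(x : A) - \mathrm{qic}^{\mathrm{poly}}(x : A)
\end{align*}
```

In this notation, an instance x is queasy when Δ(x) is large.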

Why “Queasy”?

The playful name captures the imbalance between classical and quantum effort. A queasy instance is one that makes classical algorithms struggle, i.e. the shortest efficient programs that decide it are long and unwieldy, while a quantum computer can handle the same instance with a much simpler, faster, and shorter program. In other words, these instances make classical computers “queasy,” while quantum ones solve them efficiently and find them quantum-easy. The key point of these definitions lies in demonstrating that they yield reasonable results for well-known optimisation problems.

By carefully analysing a mapping from the problem of integer factoring to SAT (which is possible because factoring is inside NP and SAT is NP-complete), the researchers prove that there exist infinitely many queasy SAT instances. SAT is one of the most central and well-studied problems in computer science and finds numerous applications in the real world. The significant realisation that this theoretical framework highlights is that SAT is not expected to yield a blanket quantum advantage, but within it lie islands of queasiness – special cases where quantum algorithms decisively win.

Algorithmic Utility

Finding a queasy instance is exciting in itself, but there is more to this story. Surprisingly, within the new framework it is demonstrated that when a quantum algorithm solves a queasy instance, it does much more than solve that single case. Because the program that solves it is so compact, the same program can provably solve an exponentially large set of other instances, as well. Interestingly, the size of this set depends exponentially on the queasiness of the instance!

Think of it like discovering a special shortcut through a maze. Once you’ve found the trick, it doesn’t just solve that one path, but reveals a pattern that helps you solve many other similarly built mazes, too (even if not optimally). This property is called algorithmic utility, and it means that queasy instances are not isolated curiosities. Each one can open a doorway to a whole corridor with other doors, behind which quantum advantage might lie.

A North Star for the Field

Queasy instances are more than a mathematical curiosity; this is a new framework that provides a language for quantum advantage. Even though the quantities defined in the paper are theoretical, involving Turing machines and viewing programs as abstract bitstrings, they can be approximated in practice by taking an experimental and engineering approach. This work serves as a foundation for pursuing quantum advantage by targeting problem instances and proving that in principle this can be a fruitful endeavour.

The researchers see a parallel with the rise of machine learning. The idea of neural networks existed for decades along with small-scale analogue and digital implementations, but only when GPUs enabled large-scale trial and error did they explode into practical use. Quantum computing, they suggest, is on the cusp of its own heuristic era. “Quristics” will be prominent in finding queasy instances, whose structure makes classical methods struggle but which quantum algorithms can exploit, and in eventually arriving at solutions to typical real-world problems. After all, quantum computing is well-suited for small-data big-compute problems, and our framework employs the concepts to quantify that; instance complexity captures both their size and the amount of compute required to solve them.

Most importantly, queasy instances shift the conversation. Instead of asking the broad question of when quantum computers will surpass classical ones, we can now rigorously ask where they do. The queasy framework provides a language and a compass for navigating the rugged and jagged computational landscape, pointing researchers, engineers, and industries toward quantum advantage.

From September 16th – 18th, Quantum World Congress (QWC) brought together visionaries, policymakers, researchers, investors, and students from across the globe to discuss the future of quantum computing in Tysons, Virginia.

Quantinuum is forging the path to universal, fully fault-tolerant quantum computing with our integrated full-stack. With our quantum experts on site, we showcased the latest on Quantinuum Systems, the world’s highest-performing, commercially available quantum computers, our new software stack featuring the key additions of Guppy and Selene, our path to error correction, and more.

Highlights from QWC

Dr. Patty Lee Named the Industry Pioneer in Quantum

The Quantum Leadership Awards celebrate visionaries transforming quantum science into global impact. This year at QWC, Dr. Patty Lee, our Chief Scientist for Hardware Technology Development, was named the Industry Pioneer in Quantum! This honor celebrates her more than two decades of leadership in quantum computing and her pivotal role advancing the world’s leading trapped-ion systems. Watch the Award Ceremony here.

Keynote with Quantinuum's CEO, Dr. Rajeeb Hazra

At QWC 2024, Quantinuum’s President & CEO, Dr. Rajeeb “Raj” Hazra, took the stage to showcase our commitment to advancing quantum technologies through the unveiling of our roadmap to universal, fully fault-tolerant quantum computing by the end of this decade. This year at QWC 2025, Raj shared the progress we’ve made over the last year in advancing quantum computing on both commercial and technical fronts and exciting insights on what’s to come from Quantinuum. Access the full session here.

Panel Session: Policy Priorities for Responsible Quantum and AI

As part of the Track Sessions on Government & Security, Quantinuum’s Director of Government Relations, Ryan McKenney, discussed “Policy Priorities for Responsible Quantum and AI” with Jim Cook from Actions to Impact Strategies and Paul Stimers from Quantum Industry Coalition.

Fireside Chat: Establishing a Pro-Innovation Regulatory Framework

During the Track Session on Industry Advancement, Quantinuum’s Chief Legal Officer, Kaniah Konkoly-Thege, and Director of Government Relations, Ryan McKenney, discussed the importance of “Establishing a Pro-Innovation Regulatory Framework”.