Japan has made bold, strategic investments in both high-performance computing (HPC) and quantum technologies. As these capabilities mature, an important question arises for policymakers and research leaders: how do we move from building advanced machines to demonstrating meaningful, integrated use?

Last year, Quantinuum installed its Reimei quantum computer at a world-class facility in Japan operated by RIKEN, the country’s largest comprehensive research institution. The system was integrated with Japan’s famed supercomputer Fugaku, one of the most powerful in the world, as part of an ambitious national project commissioned by the New Energy and Industrial Technology Development Organization (NEDO), the national research and development entity under the Ministry of Economy, Trade and Industry.

Now, for the first time, a full scientific workflow has been executed across Fugaku and Reimei, our trapped-ion quantum computer. This marks a transition from infrastructure development to practical deployment.

Quantum Biology

In this first foray into hybrid HPC-quantum computation, the team explored chemical reactions that occur inside biomolecules such as proteins. Reactions of this type are found throughout biology, from enzyme functions to drug interactions.

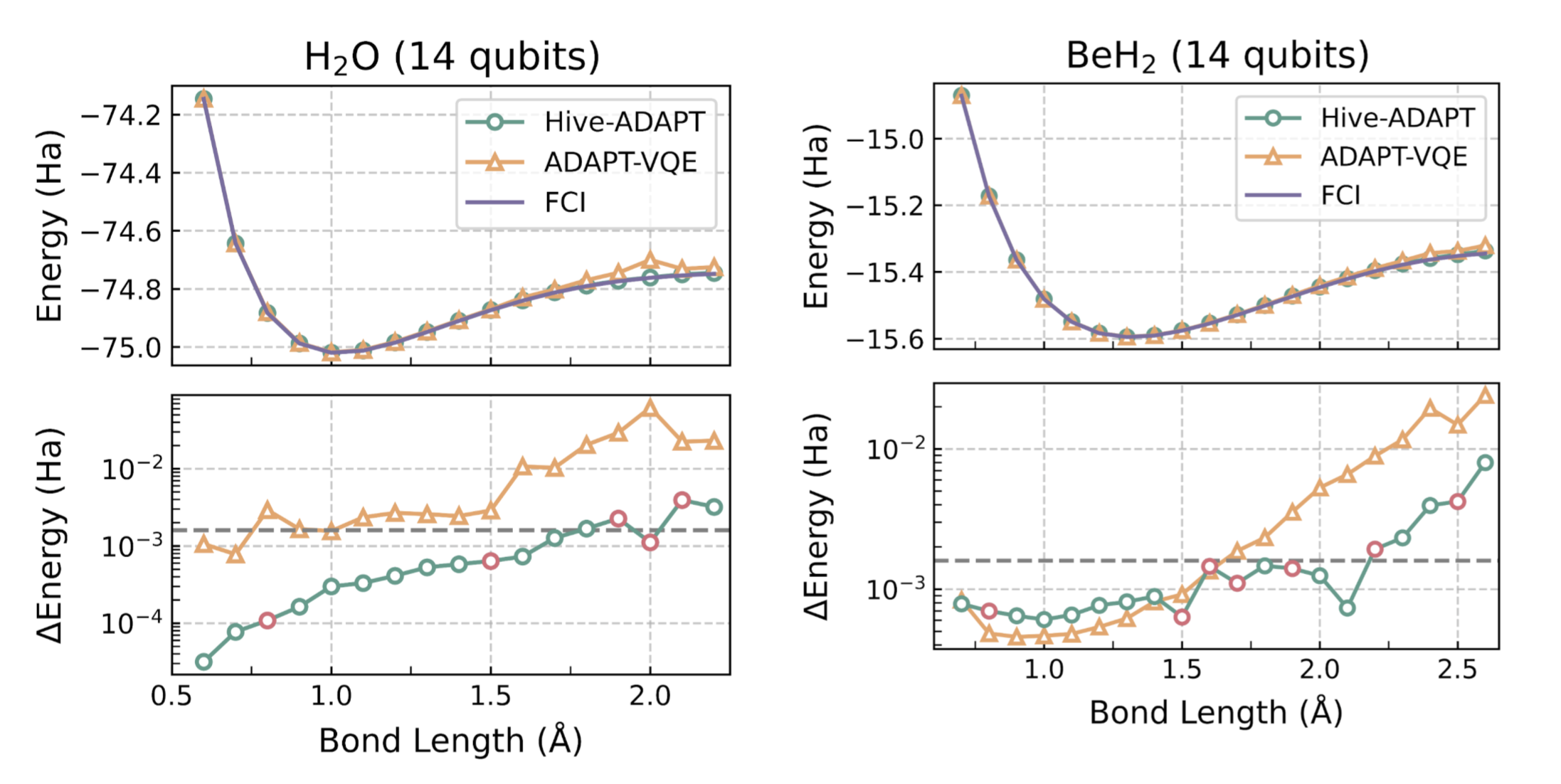

Simulating such reactions accurately is extremely challenging. The region where the chemical reaction occurs, known as the “active site”, requires very high precision, because subtle electronic effects determine the outcome. At the same time, this active site is embedded within a much larger molecular environment that must also be represented, though typically at a lower level of detail.

To address this complexity, computational chemistry has long relied on layered approaches, in which different parts of a system are treated with different methods. In our work, we extended this concept into the hybrid computing era by combining classical supercomputing with quantum computing.
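Layered methods come in several flavours. One widely used example in computational chemistry is the subtractive two-layer (ONIOM-style) energy expression, sketched below in Python purely to illustrate the layered idea. The post does not say which embedding scheme the team used, and the `LayerEnergies` class and `two_layer_energy` function are hypothetical names introduced for this sketch.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LayerEnergies:
    """Energies (e.g. in hartree) from the three calculations a two-layer subtractive scheme needs.

    Hypothetical container for illustration only.
    """
    high_model: float  # expensive, high-level method on the small active-site model
    low_model: float   # cheap, low-level method on the same small model
    low_real: float    # cheap, low-level method on the full system (active site + environment)


def two_layer_energy(e: LayerEnergies) -> float:
    """Subtractive two-layer estimate: E = E_low(real) + [E_high(model) - E_low(model)].

    The bracketed term upgrades the active site from the low-level to the
    high-level description, while the environment stays at the cheaper level.
    """
    return e.low_real + (e.high_model - e.low_model)


if __name__ == "__main__":
    # Made-up illustrative numbers, not results from the Fugaku/Reimei study.
    energies = LayerEnergies(high_model=-100.25, low_model=-100.0, low_real=-500.0)
    print(two_layer_energy(energies))  # -500.25
```

The appeal of this design is that only the small model region ever sees the expensive method; the large environment is handled entirely by the cheap one.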

Shifting the Paradigm

While the long-term goal of quantum computing is to outperform classical methods, the purpose of this project was to demonstrate a fully functional hybrid system working as an end-to-end platform for real scientific applications. It is not enough to develop hardware in isolation; we must also build workflows in which classical and quantum resources together deliver more than either can alone. We believe this is a crucial step for our industry: large-scale national investments in quantum computing must ultimately show how the technology can be embedded within existing research infrastructure.

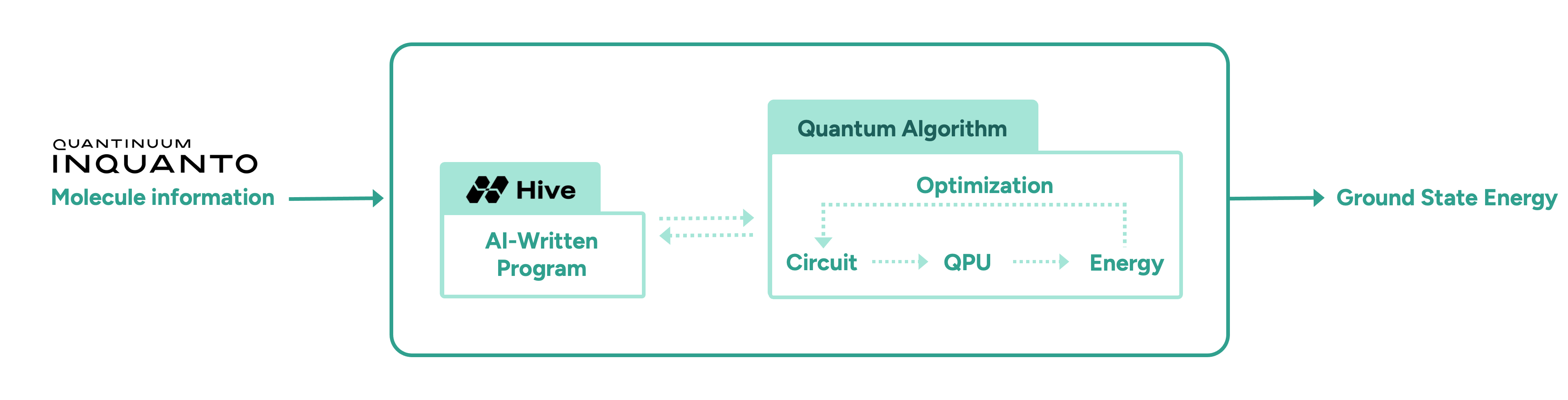

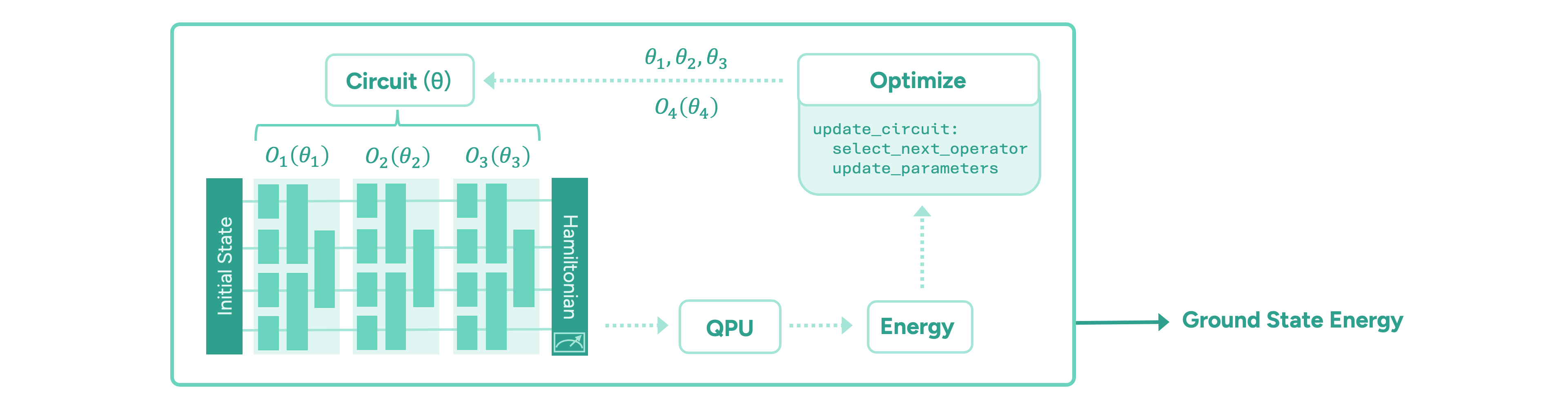

In this work, the supercomputer Fugaku handled geometry optimization and baseline electronic structure calculations. The quantum computer Reimei was used to enhance the treatment of the most difficult electronic interactions in the active site, those that are known to challenge conventional approximate methods. The entire process was coordinated through Quantinuum’s workflow system Tierkreis, which allows jobs to move efficiently between machines.

Hybrid Computation is Now an Operational Reality

With this infrastructure in place, we are now positioned to put quantum computing to practical use. In this demonstration, the researchers designed the algorithm specifically to exploit the respective strengths of the quantum and classical hardware.

First, the classical computer constructs an approximate description of the molecular system. Then, the quantum computer models the detailed quantum mechanics of the active site, which classical approximate methods struggle to capture accurately. Together, the two improve accuracy beyond what the classical approximation achieves on its own, extending the utility of the classical system.
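The three steps above can be sketched as a small Python pipeline in which the classical and quantum stages are passed in as plain functions. This is a minimal sketch under stated assumptions: it assumes the quantum result enters as an additive correction to the classical active-space energy, which the post does not specify, and `ActiveSpaceProblem`, `HybridResult` and `run_hybrid_workflow` are hypothetical names. It does not use Tierkreis or any real scheduler API.

```python
from dataclasses import dataclass
from typing import Callable, Tuple


@dataclass(frozen=True)
class ActiveSpaceProblem:
    """Hypothetical hand-off object from the classical stage to the quantum stage."""
    n_electrons: int
    n_orbitals: int
    approx_energy: float  # active-space energy at the classical (approximate) level


@dataclass(frozen=True)
class HybridResult:
    baseline_energy: float  # full-system energy from the classical method
    correction: float       # quantum estimate minus classical estimate for the active space
    total_energy: float


def run_hybrid_workflow(
    classical_stage: Callable[[], Tuple[float, ActiveSpaceProblem]],
    quantum_stage: Callable[[ActiveSpaceProblem], float],
) -> HybridResult:
    # Step 1 (classical): build the approximate description of the whole system
    # and extract the small, difficult active-space problem.
    baseline, active = classical_stage()
    # Step 2 (quantum): estimate the active-space energy more accurately.
    quantum_energy = quantum_stage(active)
    # Step 3 (assumed additive scheme): swap the approximate active-space energy
    # for the quantum estimate.
    correction = quantum_energy - active.approx_energy
    return HybridResult(baseline, correction, baseline + correction)


if __name__ == "__main__":
    # Stub stages with made-up numbers; a real workflow would dispatch these as jobs.
    result = run_hybrid_workflow(
        classical_stage=lambda: (-500.0, ActiveSpaceProblem(4, 4, -100.0)),
        quantum_stage=lambda p: p.approx_energy - 0.25,
    )
    print(result.total_energy)  # -500.25
```

Keeping the stages as injected callables means the same driver logic could, in principle, sit behind different orchestration layers, with only the stage implementations changing as the hardware does.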

A Path to Hybrid Advantage

Accurate simulation of biomolecular reactions remains one of the major challenges in biochemistry. Although the present study uses simplified systems to focus on methodology, it lays the groundwork for future applications in drug design, enzyme engineering, and photoactive biological systems.

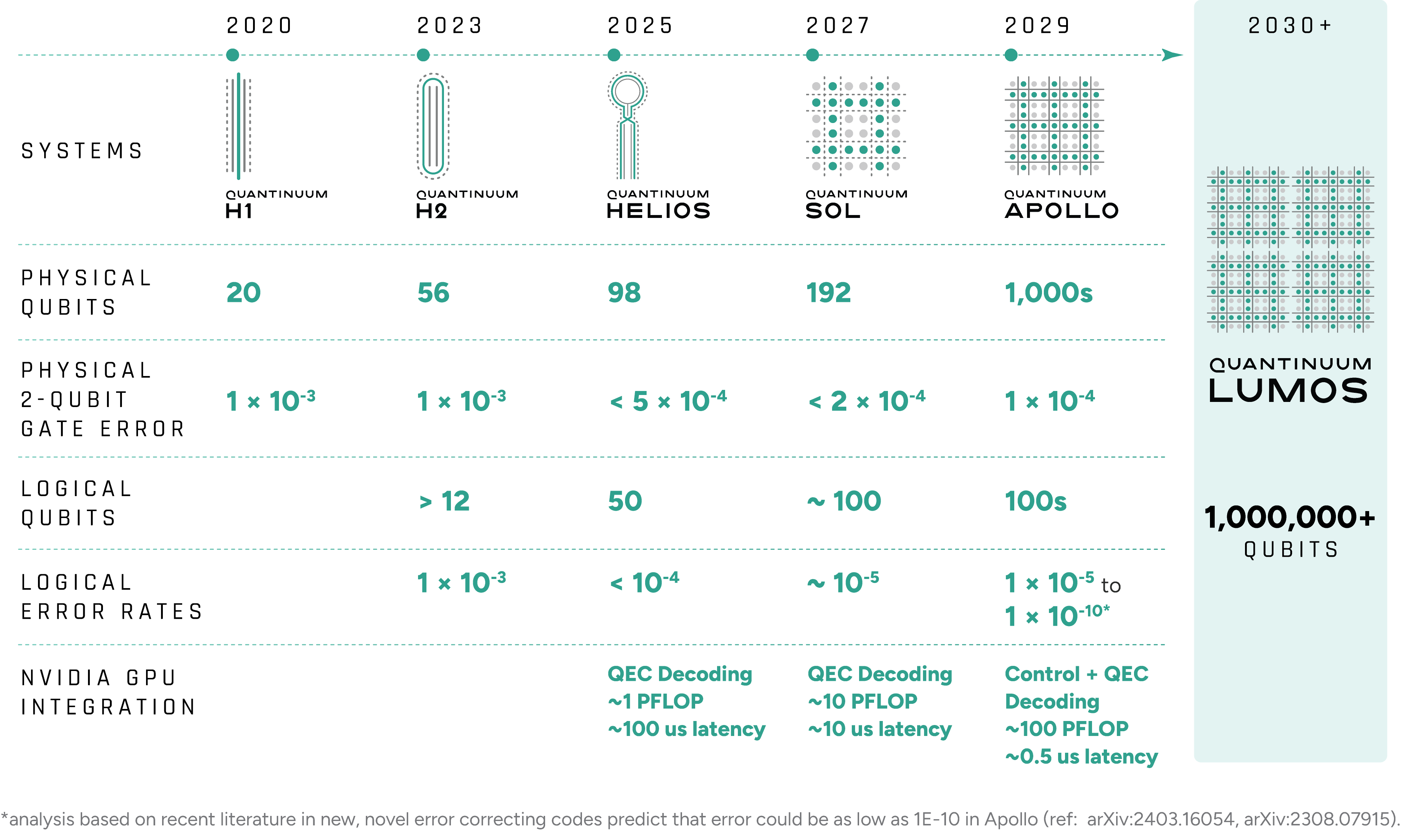

While fully fault-tolerant, large-scale quantum computers are still under development, hybrid approaches allow today’s quantum hardware to augment powerful classical systems such as Fugaku in exploring meaningful applications. As quantum technology matures, the same workflows can be scaled up accordingly.

High-performance computing centers worldwide are actively exploring how quantum devices might integrate into their ecosystems. By demonstrating coordinated job scheduling, direct hardware access, and workflow orchestration across heterogeneous architectures, this work offers a concrete example of how such integration can be achieved.

As quantum hardware matures, we believe the algorithms and workflows developed here can be extended to increasingly realistic and industrially relevant problems. For Japan’s research ecosystem, this first application milestone signals that hybrid quantum–supercomputing is moving from ambition to implementation.